Perception SDK: Accelerating the Journey from Pixels to Insights

Perception

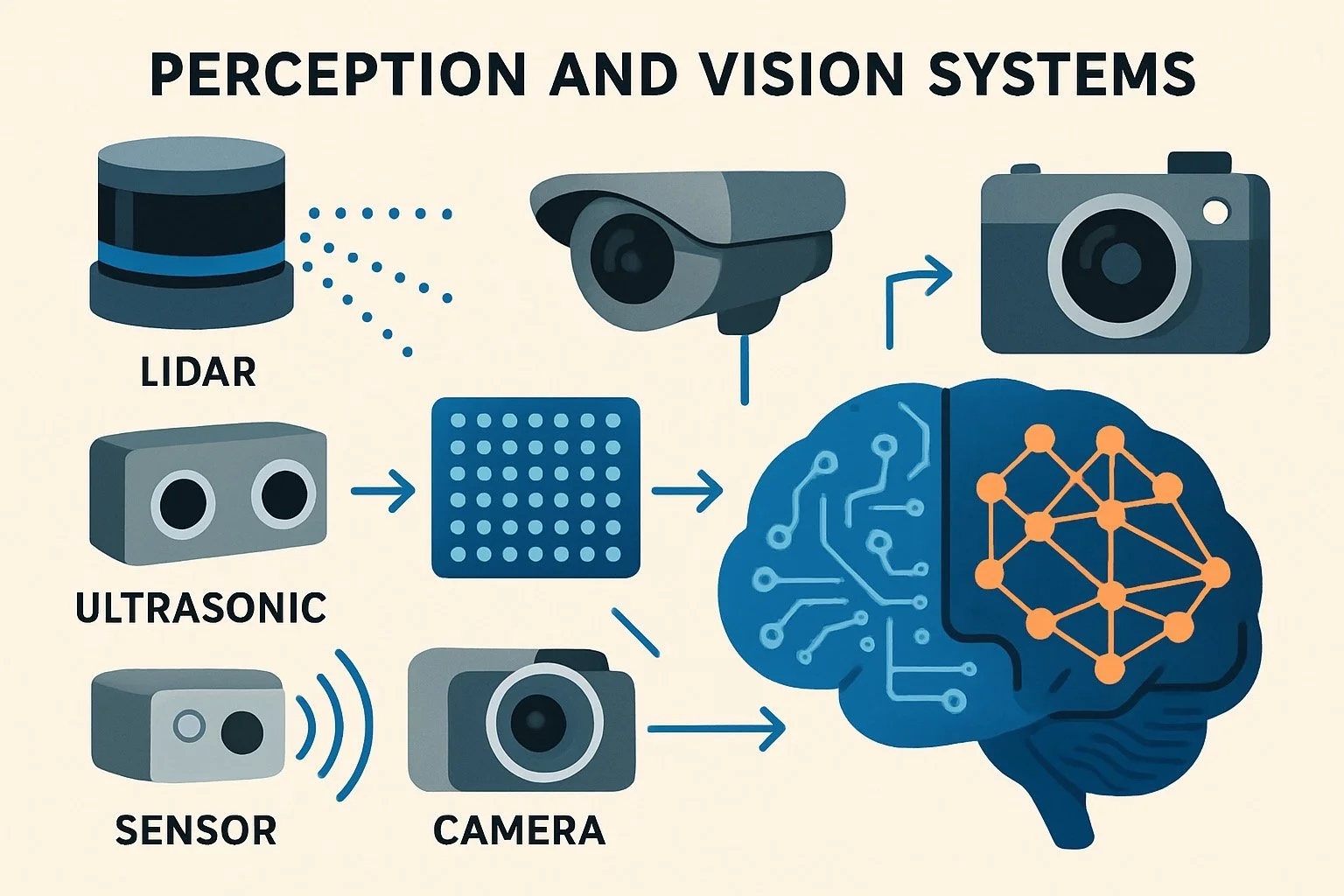

You’ve probably heard of Tesla’s autonomous vehicles. Ever wondered how they understand their surroundings? The answer lies in perception: the ability of machines to interpret their environment through vision and other sensors. In robotics, perception is what enables a system to “see” and make sense of the world around it.

Software Development Kit (SDK)

Modern AI and robotics use advanced algorithms for vision-based applications such as object detection, tracking, mapping, and autonomous navigation. Fascinating, isn’t it? The good news is that we can build such systems ourselves. This is made possible by a Software Development Kit (SDK): a collection of documentation, tools, and libraries that lets us learn, test, and develop perception-driven applications.

Perception SDK

A Perception SDK generally includes the components needed for AI applications such as mapping, autonomous navigation, and vision-based tasks like object detection, segmentation, and pose estimation. Depending on its complexity, an SDK may cover some or all of the following:

Sensor Integration – Cameras, LiDAR, and depth sensors are the backbone of perception systems. A good SDK makes integration modular, efficient, and suitable for real-time systems like self-driving cars.

Computer Vision Modules – Once sensors are connected, perception tasks such as detection, tracking, segmentation, and pose estimation can be implemented. Effective SDKs follow a modular approach, enabling easy integration with platforms like ROS and simulation tools.

3D Perception – In robotics, mapping and navigation are among the most important applications. Mapping allows a robot to build a model (map) of its environment, while navigation enables it to move from point A to point B autonomously. Common mapping algorithms include Gmapping, Hector Mapping, and ORB-SLAM.

Sensor Fusion – Combining multiple sensors to improve accuracy is called sensor fusion. For example, odometry from wheel encoders can be improved by combining it with IMU data. Similarly, visual SLAM with cameras can be combined with LiDAR for richer environmental mapping.
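To make the fusion idea concrete, here is a minimal sketch, assuming two independent, unbiased estimates of the same quantity (for example, a heading from wheel odometry and one from an IMU) whose Gaussian noise variances are known. It uses inverse-variance weighting, which is the static special case of a Kalman filter update. The function name and sensor values are hypothetical and not part of any particular SDK.

```python
def fuse_estimates(z1: float, var1: float, z2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent noisy measurements of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor contributes more. Returns (fused_value, fused_variance).
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)  # never larger than either input variance
    return fused, fused_var


if __name__ == "__main__":
    # Hypothetical readings: the odometry heading is noisier than the IMU heading.
    odom_heading, odom_var = 0.52, 0.04  # radians, radians^2
    imu_heading, imu_var = 0.48, 0.01
    heading, var = fuse_estimates(odom_heading, odom_var, imu_heading, imu_var)
    print(f"fused heading = {heading:.3f} rad, variance = {var:.4f}")
```

The fused value sits closer to the lower-variance IMU reading, and the fused variance is smaller than either input. A full Kalman filter adds a motion model so that estimates can also be propagated between measurements.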

Benefits of Perception SDKs

The best thing you can offer a developer, whatever the application, is a well-designed, modular, and efficient SDK. It saves time by eliminating the need to build everything from scratch, allowing developers to focus on their application logic rather than low-level implementation details. From student prototypes to commercial products, perception SDKs help accelerate application development.

Key advantages of using Perception SDKs include:

Reduced development time: Eliminates the need to rewrite core, frequently used algorithms.

Optimized, ready-made modules: Reliable and modular algorithms for vision and perception tasks.

Hardware acceleration: Efficient use of GPU/CPU resources for real-time performance across applications with different requirements.

Seamless integration: Modular design for compatibility with ecosystems such as ROS and NVIDIA Isaac, simulators such as Gazebo, and more.

Faster prototyping and experimentation: Enables quick testing of devices, sensors, algorithms and new ideas.

Applications of Perception SDKs

Perception SDKs power many of today’s cutting-edge technologies, enabling machines and robots to sense, understand, and act on their environment. They are the backbone of advanced applications including self-driving cars, household service robots, advanced surveillance systems, medical imaging solutions, and a wide range of engineering applications. Below are some of the most prominent use cases:

Autonomous Vehicles: The development of self-driving vehicles is one of the hottest research topics today. Companies such as Tesla, Waymo, and Cruise use perception algorithms for lane detection, pedestrian tracking, traffic sign recognition, and obstacle avoidance so that their vehicles can drive safely in complex and unpredictable environments.

Household Robots: Robots such as iRobot’s Roomba, Ecovacs Deebot, and Roborock use advanced 3D perception and mapping to build a map of the house and then navigate autonomously to clean it.

Educational Robots: Educational robots are among the key beneficiaries of perception SDKs, as these tools enable students and researchers to demonstrate, develop, and test AI and robotics applications in real-world scenarios. Humanoid robots such as NAO, Pepper, and Romeo from SoftBank serve as excellent platforms for teaching human–robot interaction and cognitive robotics. On the other hand, mobile robots like Jackal, TurtleBot, and E-Puck are widely used in laboratories and universities for experiments in navigation, mapping, and perception, making them popular choices for hands-on learning.

Drones and Aerial Robots: When it comes to advanced surveillance systems, drones and aerial robots can’t go unnoticed. Drones use powerful perception SDKs for mapping, surveillance, agriculture, and even delivery services. DJI, one of the best-known makers of professional drones, uses vision-based obstacle avoidance, aerial mapping, and object tracking so that its drones can navigate safely in complex environments.

Famous Perception SDKs

When it comes to perception, or any other field, no single SDK covers every application and requirement. In fact, there are thousands of SDKs, and new ones are developed continuously because developers, researchers, and companies build AI-powered applications and often share their work with the community. Each SDK brings something unique: some focus on computer vision, others on robotics or 3D perception. While all of them are valuable in their own way, some have gained wide popularity for their reliability, active ecosystems, and ease of use. Here are some of the most well-known perception SDKs you should know about:

OpenCV (Open Source Computer Vision Library): This is one of the most widely used frameworks for learning, testing, and developing image processing and computer vision applications. It comes with a rich collection of algorithms for tasks such as object detection, tracking, and image segmentation, while also supporting more advanced perception applications. Developers often pair this framework with other AI and robotics tools to streamline development and build more powerful systems.

NVIDIA Isaac SDK / Isaac ROS: Developed by NVIDIA to accelerate AI-based perception applications, this SDK offers GPU-accelerated modules for tasks including object detection, 3D perception, and navigation. It is compatible with the ROS (Robot Operating System) ecosystem and supports simulation tools such as Isaac Sim.

Intel RealSense SDK: Intel’s RealSense depth cameras can be used for advanced perception applications including depth estimation and sensing, 3D scanning, gesture tracking, and visual mapping. For many depth applications, depth cameras are a capable and more affordable alternative to LiDAR. Alongside the cameras, Intel provides a well-documented SDK (librealsense) that supports everything from device testing to practical application development.

Google MediaPipe: A lightweight, feature-rich framework of perception algorithms designed specifically for real-time and mobile applications. It covers a variety of perception tasks but is best known for pose estimation, hand tracking, and facial landmark detection.

ROS Perception Stack: ROS is one of the most popular and useful ecosystems for testing, developing, and deploying advanced robotics applications. It has a dedicated perception stack that includes 2D/3D object recognition, point cloud processing (PCL), and SLAM. Many developers choose it for its strong community and support. For instance, I developed a small perception SDK named ROS2CV for controlling robots using perception; it includes face recognition, object recognition, hand tracking, finger detection, and pose estimation.
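The RealSense entry above mentions depth sensing and 3D scanning. At the core of both is turning each depth pixel into a 3D point by inverting the pinhole camera model. librealsense ships its own helper for this (`rs2_deproject_pixel_to_point`), which also handles the camera's distortion model. The sketch below shows only the ideal, undistorted pinhole math, and the intrinsics are made-up placeholder values rather than real calibration data.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics: focal lengths and principal point, in pixels."""
    fx: float
    fy: float
    ppx: float
    ppy: float


def deproject(intr: Intrinsics, u: float, v: float, depth_m: float) -> tuple[float, float, float]:
    """Map pixel (u, v) with depth Z (meters) to a 3D point in the camera frame.

    Assumes an ideal pinhole camera with no lens distortion and a camera frame
    with X to the right, Y down, and Z forward:
        X = (u - ppx) * Z / fx,  Y = (v - ppy) * Z / fy
    """
    x = (u - intr.ppx) / intr.fx * depth_m
    y = (v - intr.ppy) / intr.fy * depth_m
    return x, y, depth_m


if __name__ == "__main__":
    # Placeholder intrinsics for a 640x480 stream (not real calibration values).
    intr = Intrinsics(fx=600.0, fy=600.0, ppx=320.0, ppy=240.0)
    # A pixel 100 px right of center, seen at 1.5 m depth.
    print(deproject(intr, 420.0, 240.0, 1.5))
```

Applying this to every valid pixel of a depth frame yields a point cloud, which is the input that 3D scanning and mapping pipelines build on.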

Limitations

There is no master key that opens every lock, and the same is true of SDKs. Every SDK, however powerful for developing AI applications, comes with limitations. Some are optimized for computer vision but do not support 3D perception, while others integrate well with robotics applications but are not ideal for lightweight or mobile applications. Hardware compatibility, limited documentation, and dependency on specific ecosystems can also limit their applicability. In real-world projects, developers often have to combine multiple SDKs or customize an existing one to achieve the desired outcomes and features.

Common Limitations of Perception SDKs:

Hardware Dependency: Many SDKs are designed for specific hardware such as depth cameras, LiDAR, or GPUs, which limits compatibility with systems built around other sensors.

Ecosystem Dependency: Some SDKs work best only within their parent ecosystem; for example, the ROS perception stack may not perform optimally in the NVIDIA ecosystem, and vice versa. This dependency reduces an SDK’s flexibility, especially when integrating with other ecosystems.

Scalability Challenges: Lightweight SDKs such as Google MediaPipe may not scale well to large, complex applications. Conversely, complex SDKs may be a poor fit for lightweight applications.

Documentation and Community Gaps: Limited resources or small user bases can slow down adoption and troubleshooting.

What Is Robotic Manipulation & Dexterity, and What’s the Latest in the Field?

Exploring Robotic Manipulation and Dexterity

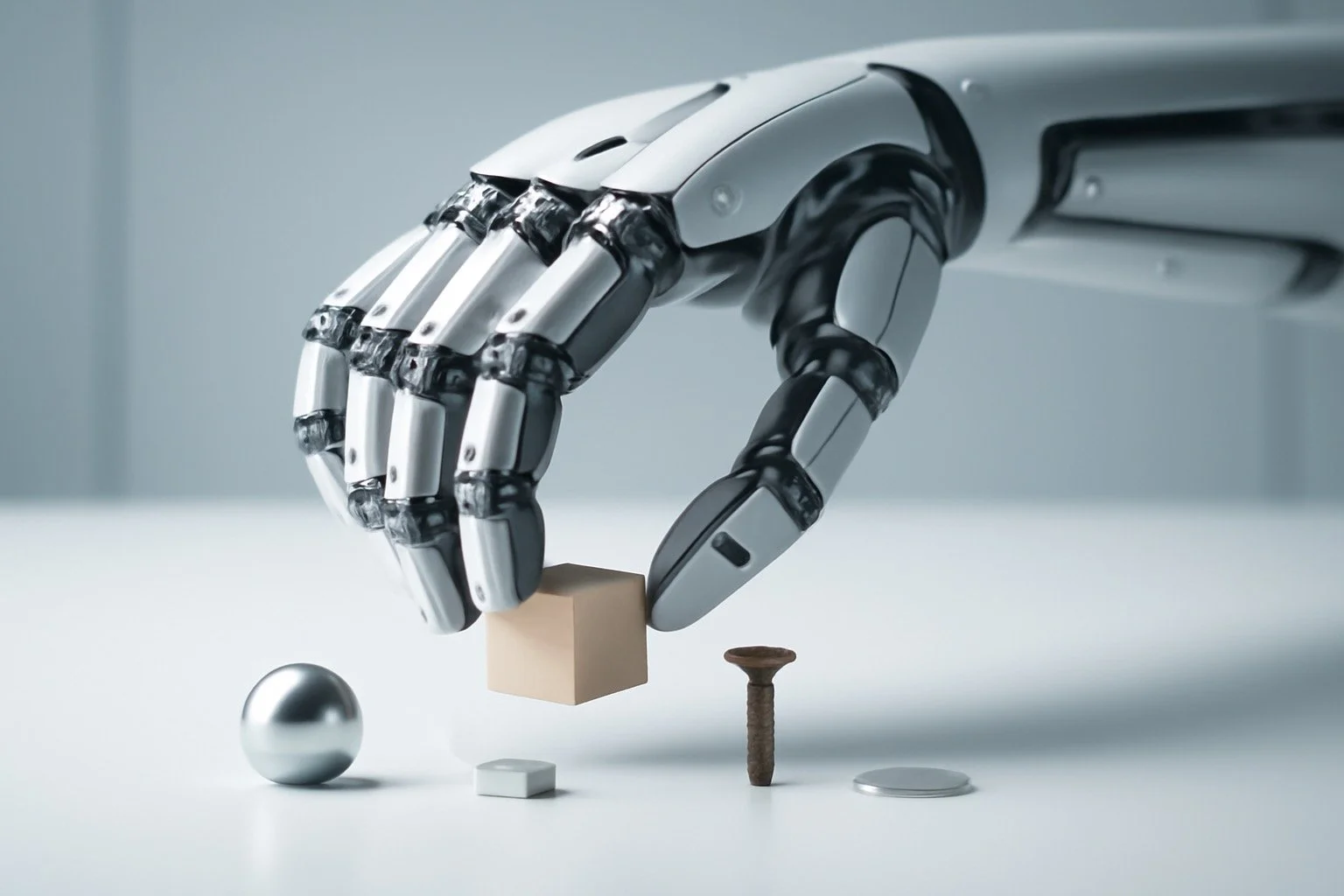

Robotic manipulation and dexterity are fascinating fields that focus on a robot's ability to interact with objects in a way that mimics human performance. This involves tasks like grasping, moving, assembling, or manipulating items with precision and adaptability. Dexterous manipulation takes it a step further, allowing robots to perform intricate tasks such as threading a needle or handling delicate objects.

What Is Robotic Manipulation & Dexterity?

Robotic Manipulation: This involves using robots to grasp, move, and manipulate objects in their environment. It's made possible by a combination of mechanical design (like arms, fingers, and grippers), sensors, and sophisticated software control systems.

Dexterity: This highlights a robot's ability to achieve human-like precision and adaptability in tasks. This includes reorienting objects, fine assembly, and making responsive grip changes in real time.

The goal is to automate tasks that are easy for humans but challenging for machines, such as adjusting to unknown or unstable objects, using tactile feedback, and quickly generalizing manipulation skills to new scenarios.

Latest Developments (2025)

AI-Driven Learning: Reinforcement learning (RL) and imitation learning now allow robots to learn dexterous skills from simulation and real-world demonstrations, reducing the need for human intervention and improving sample efficiency.

Tactile Sensing: Robotic hands such as the F-TAC Hand offer unprecedented tactile sensitivity, comparable to that of human skin, enabling nuanced grip control and safe object handling.

Advanced Graspers & Hands: Robotic hands like DEX-EE (for deep learning research) and multi-fingered hands are increasingly robust, adaptable, and capable of surviving harsh environments while manipulating complex objects.

Hardware-Software Co-Evolution: Research groups are creating frameworks where robotic bodies and control policies evolve together based on real-time sensor feedback, accelerating dexterous learning and reliability.

Industry Deployment: The latest algorithms let robots evaluate thousands of manipulation strategies in seconds, enabling deployment in fast-paced logistics and assembly roles.

Collaboration Projects: Leading labs (Stanford, MIT, Google DeepMind) and companies (NVIDIA, Boston Dynamics) are investing in universal dexterous skill frameworks, multi-modal sensors, and embodied intelligence for manipulation tasks.

Robotic manipulation and dexterity are rapidly advancing, blending deep learning, sophisticated hardware, and sensor fusion to meet challenges in automation, healthcare, and real-world problem-solving.

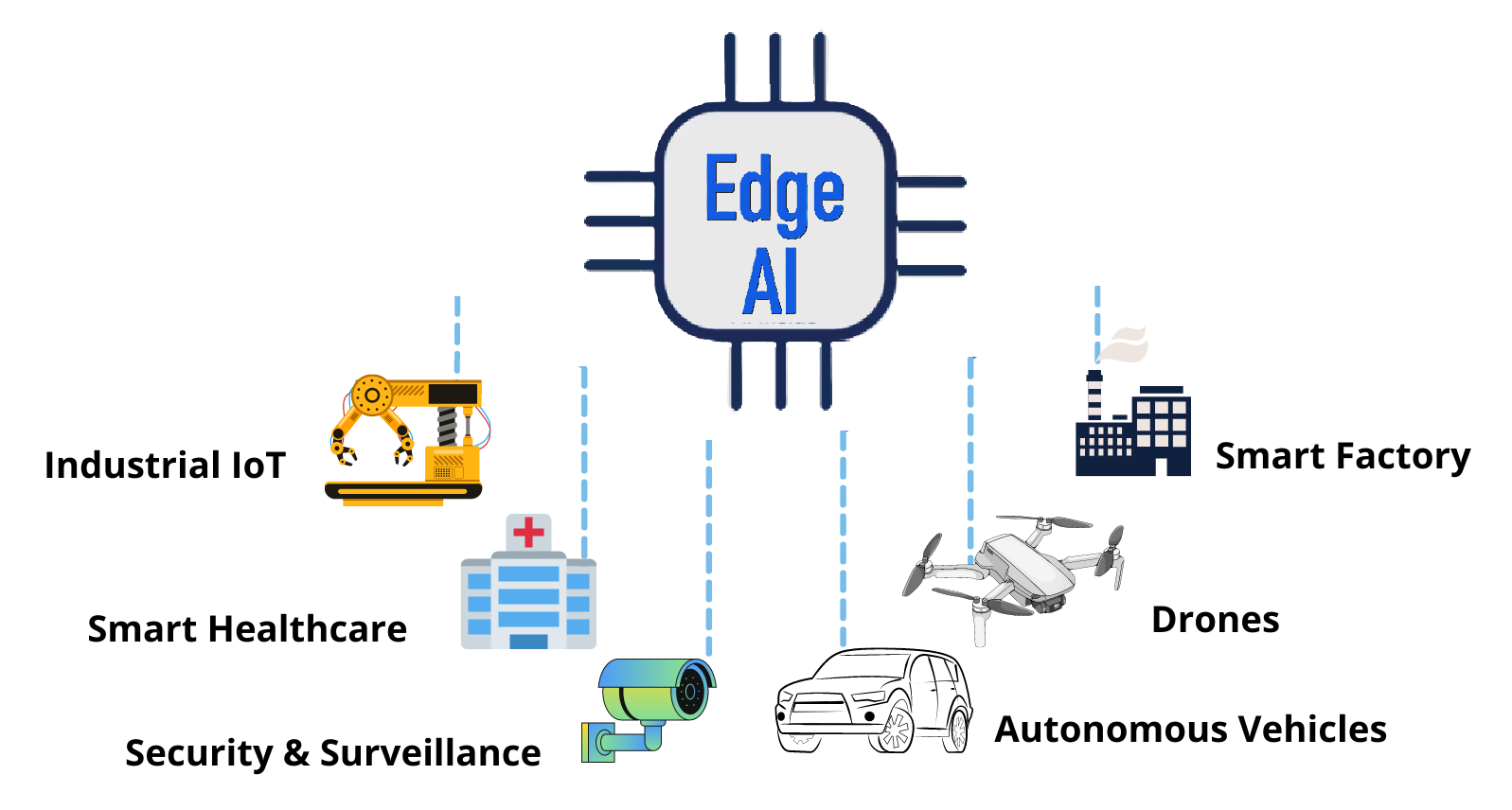

Jetson Orin platform and Edge AI inference plugin

The Jetson Orin platform from NVIDIA is a high-performance, energy-efficient edge computing solution designed for real-time AI inference, robotics, and sensor fusion tasks. The ecosystem supports plug-and-play integration with most popular Edge AI inference plugins and frameworks, making it ideal for robotics developers, AI researchers, and companies seeking accelerated edge intelligence.

Jetson Orin Platform Overview

Jetson Orin modules range from the Orin Nano to the AGX Orin, which delivers up to 275 TOPS of AI performance, up to 8x that of the previous-generation Jetson AGX Xavier. They feature Ampere GPUs with Tensor Cores, robust multi-camera and sensor support, and a scalable power envelope suited to embedded robotics, factory automation, logistics, smart retail, and autonomous vehicles. These platforms run complex vision and multimodal inference entirely at the edge, minimizing cloud dependency.

Edge AI Inference Plugins & Supported Frameworks

Jetson Orin is compatible with a wide array of AI inference plugins and toolkits:

Popular frameworks: TensorFlow, PyTorch, ONNX, TensorRT, Apache TVM, and TensorFlow Lite facilitate model training, conversion, and efficient deployment.

NVIDIA JetPack SDK includes the CUDA, cuDNN, and TensorRT libraries and is built on Ubuntu-based Jetson Linux. JetPack enables hardware-accelerated AI pipelines, trusted execution (OP-TEE), and native support for multi-sensor fusion.

Reference AI workflows: The platform offers plug-ins for generative AI (LLMs), computer vision (YOLO, ResNet), and robotics tasks with pre-trained models and development kits.

Edge AI Integration and Deployment

Developers use JetPack for rapid deployment, hardware abstraction, and cloud-native workflows. Live model updates can be performed with minimal downtime.

Model optimization supports INT8, FP16, and FP32 precision for improved real-time inference, using tools like TensorRT or TVM for quantization and performance tuning.

Real-world applications include real-time object detection, autonomous navigation, smart surveillance, and multi-sensor robotics.
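As a rough illustration of what INT8 quantization does numerically (not how TensorRT or TVM implement it internally), the sketch below applies symmetric per-tensor quantization: one scale maps the largest absolute value to 127, values are rounded to integers in [-127, 127], and dequantizing exposes the rounding error that the reduced precision introduces. Production toolchains typically derive scales from calibration data; here the scale simply comes from the tensor's own maximum, and the weights are made-up example values.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization.

    Returns the quantized integers and the scale such that value ≈ q * scale.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer, then clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map INT8 values back to floating point."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.8, -1.27, 0.003, 0.5]  # hypothetical FP32 weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8:", q, "scale:", scale, "max abs error:", max_err)
```

The per-value error is bounded by half the scale, which is why small values such as 0.003 can collapse to zero. This loss of precision is the tradeoff for the speed and memory gains of INT8 inference, and it is why calibration matters.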

Key Takeaways

Jetson Orin excels at powering modern Edge AI with high throughput and low latency for complex models.

Support for major AI frameworks and diverse inference plugins allows flexible, modular development for robotics and vision tasks at the edge.

The platform is widely adopted in robotics, retail, smart cities, and industry, offering scalable solutions with best-in-class cost and power efficiency.

For detailed technical architecture, deployment best practices, and plugin integration specifics, consult the Jetson AGX Orin Technical Brief and the JetPack documentation.

Perception SDK

What “Perception SDK” means in industry (short)

A perception SDK is middleware + libraries that let engineers convert raw sensor data (cameras, LiDAR, radar, IMU) into usable scene representations (2D/3D detection, tracking, semantic maps, occupancy, BEV, etc.). In industry, these SDKs bundle sensor abstraction, calibration tooling, optimized inference runtimes, pre-trained models, and integrations with higher stacks (localization, planning). Examples in practice include NVIDIA DRIVE/DriveWorks, NVIDIA Isaac for robots, Intel OpenVINO for optimized inference, and Mobileye’s EyeQ/SDK for camera-first ADAS/AV implementations (sources: NVIDIA Developer, OpenVINO Documentation, Mobileye).

How robotics vs. autonomous-vehicle (AV) industries use perception SDKs

Robotics (AMRs, manipulators): emphasis on real-time multi-camera odometry, 3D reconstruction, SLAM, and object segmentation for manipulation and navigation; stacks often build on ROS/Isaac ROS + optimized inference (OpenVINO / TensorRT). NVIDIA’s Isaac ROS modules (e.g., Isaac Perceptor) are commonly used to accelerate robot perception on Jetson/AGX platforms (sources: nvidia-isaac-ros.github.io, NVIDIA Developer).

Autonomous cars: heavier emphasis on multi-sensor fusion (camera+LiDAR+radar), BEV (bird’s-eye-view) perception, long-range tracking, safety and real-time constraints, and automotive-grade middleware (functional safety, ISO 26262 considerations). Industry stacks tend to use vendor SDKs (NVIDIA DRIVE, Mobileye EyeQ + EyeQ Kit) or open-source stacks like Autoware and Autoware.Auto (sources: NVIDIA, Mobileye, GitLab).

Major commercial & open-source SDKs / platforms to study (quick list)

NVIDIA DRIVE / DriveWorks / DRIVE SDK: full AV stack, sensor abstraction, accelerated perception modules, and camera pipelines (source: NVIDIA Developer).

NVIDIA Isaac / Isaac ROS / Isaac Perceptor: robot-focused libraries with modules for multi-camera perception, odometry, and 3D reconstruction (sources: NVIDIA Developer, nvidia-isaac-ros.github.io).

Mobileye EyeQ & EyeQ Kit: vision-first SoC + SDK widely integrated into OEM ADAS and some AV programs (source: Mobileye).

Intel OpenVINO: inference optimization across CPU/GPU/NPUs, used in robotics and automotive prototyping (sources: OpenVINO Documentation, amrdocs.intel.com).

Autoware / Autoware.Auto: open-source AV perception & planning stack used by research teams and some pilots (sources: GitLab, Autoware).

Other vendors / ecosystems to check: Qualcomm (Snapdragon Ride), Tier-1 suppliers (Bosch, NXP), and proprietary stacks at Waymo, Tesla, and Cruise (research & limited public docs).

Recent AI advancements shaping perception SDKs (and what they change)

BEV (Bird’s-Eye-View) methods & multi-camera fusion: converting multi-camera imagery into a unified top-down BEV representation for detection, segmentation, and prediction. BEV is now core to many AV perception pipelines (sources: GitHub, arXiv).

Transformer & spatiotemporal architectures: transformers (temporal + spatial) applied to perception (e.g., RetentiveBEV / BEV transformers) improve long-range context and motion modeling (source: SAGE Journals).

Self-supervised / semi-supervised learning & sim-to-real: methods to reduce expensive labeling, including retrieval-augmented / domain-adaptation approaches that bridge simulation and reality, which matters for scaling up industry datasets (source: arXiv).

Sensor-fusion improvements (radar + LiDAR + camera) and robustness to sensor failure: benchmarks now explicitly test corruption and sensor dropout (source: arXiv).

Edge/embedded acceleration: optimized runtimes (TensorRT, OpenVINO) and model compression are integrated into SDKs so that perception can run within automotive/robotic hardware constraints (sources: OpenVINO Documentation, amrdocs.intel.com).
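The BEV representation described in the first item is, at its simplest, a top-down grid centered on the ego vehicle. The sketch below illustrates only that grid. It rasterizes 3D points that are already in the ego frame (for example, LiDAR or depth-derived points) into a 2D occupancy map. Camera-based BEV networks instead learn the image-to-BEV lifting, which this does not attempt, and the grid extent, resolution, and height band are arbitrary assumptions.

```python
def points_to_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
                  z_range=(-2.0, 3.0), resolution=0.5):
    """Rasterize 3D points into a bird's-eye-view occupancy grid.

    Points are (x, y, z) in meters in an assumed ego frame: x forward,
    y left, z up. grid[row][col] is 1 if any point fell in that cell.
    Row 0 is the band nearest the vehicle; column 0 is the rightmost band.
    """
    n_rows = int(round((x_range[1] - x_range[0]) / resolution))
    n_cols = int(round((y_range[1] - y_range[0]) / resolution))
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y, z in points:
        # Keep only points inside the BEV area and the height band of interest.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        if not (z_range[0] <= z <= z_range[1]):
            continue
        # Discretize into cell indices; min() guards against float round-off at the far edge.
        row = min(int((x - x_range[0]) / resolution), n_rows - 1)
        col = min(int((y - y_range[0]) / resolution), n_cols - 1)
        grid[row][col] = 1
    return grid


if __name__ == "__main__":
    # Hypothetical points: two hits on the same nearby object, one farther away,
    # and one beyond the 40 m range that gets dropped.
    pts = [(5.2, 1.0, 0.3), (5.3, 1.1, 0.5), (12.0, -3.0, 1.0), (60.0, 0.0, 0.0)]
    bev = points_to_bev(pts)
    print("grid:", len(bev), "x", len(bev[0]), "occupied cells:", sum(map(sum, bev)))
```

Learned BEV models keep this kind of fixed metric grid but fill each cell with a feature vector instead of a single occupancy bit. Detection, segmentation, and prediction heads then operate on that shared grid.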

Key datasets & benchmarks you should include in the research

Waymo Open Dataset: large LiDAR+camera dataset used widely for perception benchmarks and challenges (sources: arXiv, Waymo).

nuScenes / nuImages: multimodal dataset with camera, LiDAR, and radar, useful for sensor-fusion studies (source: nuscenes.org).

(Also consider KITTI, Lyft Level 5, Argoverse depending on historical comparisons and licensing.)

Typical industry integration points & engineering concerns

Sensor abstraction & calibration (SDKs provide sensor-abstraction layers, time synchronization, and calibration tooling; source: NVIDIA Developer).

Real-time constraints & inference optimization (quantization, TensorRT/OpenVINO, specialized SoCs; sources: OpenVINO Documentation, amrdocs.intel.com).

Safety, explainability, & deterministic behavior: integration with safety frameworks and monitoring (e.g., redundancy, failover when sensors misbehave). Vendor whitepapers and automotive blog posts discuss these tradeoffs (sources: NVIDIA, Mobileye).

Data lifecycle: from collection (vehicle fleets / robots) → annotation → model training → continuous validation on closed tracks and limited public roads. Waymo/nuScenes docs and DRIVE blogs are useful references.
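Time synchronization, listed under sensor abstraction, is one of the less visible jobs a perception SDK does. A common basic approach is to pair each camera frame with the nearest-in-time message from another sensor and reject pairs whose time difference exceeds a tolerance. The sketch below assumes both streams already share a clock and are sorted by timestamp. The 20 ms tolerance and the sample timestamps are arbitrary example values. Production SDKs often go further, using interpolation or hardware triggering.

```python
import bisect


def match_nearest(cam_stamps, lidar_stamps, tolerance=0.02):
    """Pair each camera timestamp with the closest LiDAR timestamp.

    Both lists are in seconds on a shared clock and sorted ascending.
    Returns (cam_index, lidar_index) pairs; camera frames with no LiDAR
    sweep within `tolerance` seconds are skipped.
    """
    pairs = []
    if not lidar_stamps:
        return pairs
    for i, t in enumerate(cam_stamps):
        j = bisect.bisect_left(lidar_stamps, t)
        # The nearest neighbor is either at index j or just before it.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(lidar_stamps)]
        best = min(candidates, key=lambda k: abs(lidar_stamps[k] - t))
        if abs(lidar_stamps[best] - t) <= tolerance:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    cam = [0.000, 0.033, 0.066, 0.100, 0.133]  # roughly 30 Hz camera
    lidar = [0.005, 0.105]                     # roughly 10 Hz LiDAR
    print(match_nearest(cam, lidar))
```

Using binary search keeps each lookup logarithmic in the length of the LiDAR stream. With a slow sensor, most camera frames go unmatched, which is why fusion pipelines usually run at the rate of the slowest sensor.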

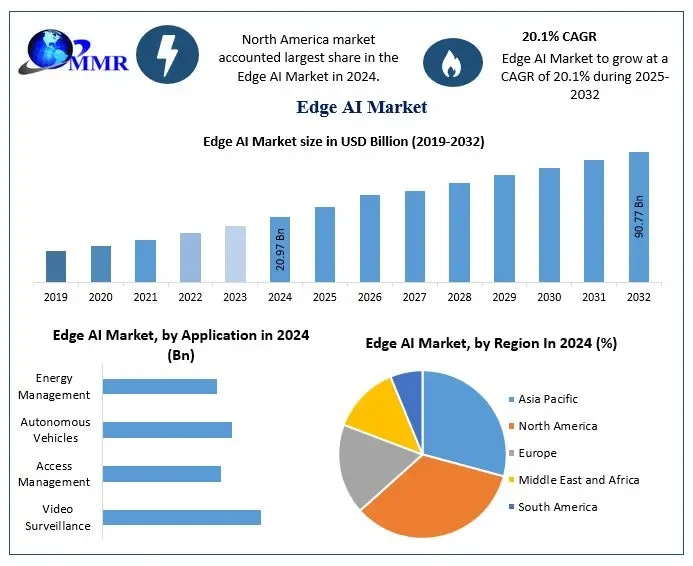

Edge AI Inference Modules and Market

The Edge AI inference module market in 2025 is booming, offering a wide array of solutions to meet the growing needs of real-time, on-device intelligence. Here’s an easy-to-read overview of the industry and how Robotonomous fits in.

Edge AI Inference Module Market: 2025 Snapshot

Market Leaders

NVIDIA

NVIDIA is a powerhouse in edge AI, especially for high-performance robotics and autonomous systems. Its Jetson Orin platform delivers up to 275 TOPS, making it ideal for demanding tasks like 3D perception and real-time object detection. Jetson AGX Orin kits are widely used for advanced robotics and even large language models, blending big compute power with developer flexibility.

Qualcomm

Specializing in mobile and IoT, Qualcomm’s Snapdragon platforms bring efficient, AI-powered processing to smartphones, AR/VR devices, and AI PCs. The Snapdragon X Elite’s NPU delivers 45 TOPS, enabling on-device intelligence in a compact form.

Intel

A long-standing player, Intel offers edge-ready Movidius Myriad VPUs for vision, which are trusted in drones, cameras, and diverse industrial scenarios, plus Habana Labs Gaudi accelerators for scalable AI in the data center.

Specialized Innovators

Hailo

Delivering impressive performance-per-watt, Hailo-8 AI accelerator supports up to 26 TOPS, perfect for smart cameras and industrial inspection.

EdgeCortix

Their SAKURA-II modules pack up to 60 TOPS into small, energy-efficient packages, and their MERA software simplifies model deployment. Ideal for compact robotics and generative AI.

Google Coral / Edge TPU

Google’s Edge TPU is a favorite in IoT and embedded development circles for its super-low-power, fast inference capabilities in smart sensors.

SiMa.AI

Targeting multimodal AI, SiMa.AI’s Modalix platform is designed for high performance-per-watt on vision and language tasks.

Lattice Semiconductor

Through partnerships (like with NVIDIA Holoscan), Lattice delivers high-speed, low-power sensor bridging and real-time edge processing.

Industry Dynamics

The market is moving fast, driven by industrial, healthcare, automotive, and smart home applications demanding instant, local decisions.

Full-stack solutions like NVIDIA’s Jetson platform simplify development for developers and companies.

Mobile-first leaders (Qualcomm, MediaTek) power mass-market consumer and IoT devices.

Specialists like Hailo and EdgeCortix innovate in cost and power efficiency, making edge AI more accessible for new use cases.

Robotonomous & Its Edge AI Inference Modules

Robotonomous is actively contributing to the future of edge AI by developing advanced inference modules specifically tailored for robotics.

Real-time, on-board AI: Robotonomous modules run powerful AI models directly on robots, ensuring split-second reaction, perception, and control in the field.

Plug-and-play compatibility: Designed to integrate seamlessly with platforms like NVIDIA Jetson and ROS, these modules speed up development and deployment.

Efficient operation: Robotonomous combines smart hardware selection and software optimization for sustained autonomy with low power draw.

Scalable solutions: Whether for inspection, mobile robotics, or industrial automation, Robotonomous edge modules provide adaptable intelligence for diverse real-world needs.

Industry focus: By partnering with leaders and targeting gaps in existing solutions, Robotonomous ensures its products meet evolving demands for reliability, cost-effectiveness, and field-tested performance.

Conclusion

In the fast-growing Edge AI inference market, leaders like NVIDIA, Qualcomm, and Intel offer broad solutions, while innovators like Hailo and EdgeCortix address specialized needs. Robotonomous stands out by delivering practical, high-performance AI modules for robotics, bridging the gap between cutting-edge hardware and real-world autonomy.

LTA Modular Plug-ins: Turning Language into Action in Software Systems

In today’s digital world, natural language has evolved into more than just a medium of communication: it’s becoming an interface to control, automate, and optimize the systems we rely on every day. This is where Language to Action (LTA) steps in.

LTA in the context of modular plug-ins is both a conceptual and technical framework where natural language inputs are seamlessly translated into executable actions within software systems. From enterprise automation to digital assistants, this approach is reshaping how humans interact with technology.

What Are LTA Modular Plug-ins?

At their core, LTA plug-ins are modules that bridge natural language and system actions. They:

Interpret natural language commands using NLP (Natural Language Processing).

Map commands to real-world actions or workflows within a system.

Integrate into larger systems such as enterprise tools, digital assistants, or automation platforms.

Work in a modular and scalable way, with each plug-in specializing in a particular domain (e.g., scheduling, reporting, IT operations).

💡 Example:

When a user says:

“Generate a sales report for Q2 and email it to the finance team.”

The LTA plug-in might:

Parse the request with NLP.

Call Salesforce or Excel to generate the report.

Use the Outlook or Teams API to email the file to the finance department.

Confirm the action back to the user.
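The flow above can be sketched as a tiny plug-in registry. This is a hypothetical, minimal illustration. Intent detection is naive keyword matching standing in for a real NLP engine. The `plugin` decorator and the `generate_report` and `send_email` handlers are invented names, and the handlers only return strings instead of calling Salesforce, Excel, Outlook, or Teams.

```python
from typing import Callable

# Registry mapping an intent name to (trigger keywords, handler).
PLUGINS: dict[str, tuple[set[str], Callable[[str], str]]] = {}


def plugin(intent: str, keywords: set[str]):
    """Decorator that registers a handler for an intent (hypothetical API)."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        PLUGINS[intent] = (keywords, fn)
        return fn
    return register


@plugin("generate_report", {"report"})
def generate_report(command: str) -> str:
    # Placeholder for a call into a reporting system.
    return "report generated"


@plugin("send_email", {"email", "mail"})
def send_email(command: str) -> str:
    # Placeholder for a call into an email or messaging API.
    return "email sent"


def handle(command: str) -> list[str]:
    """Run every plug-in whose keywords appear in the command, in
    registration order, and return one confirmation per action."""
    words = set(command.lower().replace(",", " ").replace(".", " ").split())
    results = []
    for intent, (keywords, fn) in PLUGINS.items():
        if words & keywords:
            results.append(f"{intent}: {fn(command)}")
    return results or ["Sorry, no plug-in understood that request."]


if __name__ == "__main__":
    for line in handle("Generate a sales report for Q2 and email it to the finance team."):
        print(line)
```

Each domain lives in its own decorated function, so a plug-in can be added, swapped, or removed without touching the dispatcher. That independence is the modularity described earlier. A real system would replace the keyword test with an intent classifier and pass the report from the first action to the second.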

Industry Use Cases

LTA modular plug-ins unlock value across industries by making enterprise systems more intuitive and automation-ready.

1. Enterprise Automation (RPA + NLP)

Employees can trigger back-office processes through simple text or voice commands integrated with UiPath or Automation Anywhere.

2. Customer Service

Agents create tickets or escalate issues without manual data entry:

“Create a ticket for a broken item and escalate it.”

3. Healthcare

Doctors dictate notes that get translated into EHR updates, appointment scheduling, or lab test orders — saving administrative time.

4. Finance

Leaders query systems naturally:

“What were our top 5 expenses last month?”

The plug-in translates and executes the ERP query automatically.

5. Manufacturing

Hands-free control of machinery:

“Shut down production line B and notify the maintenance team.”

6. DevOps / IT Automation

IT staff streamline operations:

“Deploy build 57 to staging.”

“Restart the database server.”
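For structured commands like the DevOps examples, a plug-in must also pull parameters (slots) such as the build number or target environment out of the sentence. Below is a minimal rule-based sketch that assumes fixed phrasings. The intent names and regular expressions are hypothetical. Real systems usually rely on a trained intent and slot model rather than hand-written patterns.

```python
import re

# Hypothetical intent patterns; named groups capture the slots.
PATTERNS = {
    "deploy": re.compile(r"^deploy build (?P<build>\d+) to (?P<env>\w+)\.?$", re.IGNORECASE),
    "restart": re.compile(r"^restart the (?P<service>[\w ]+?)\.?$", re.IGNORECASE),
}


def parse_command(text: str):
    """Return (intent, slots) for the first matching pattern, or (None, {})."""
    text = text.strip()
    for intent, pattern in PATTERNS.items():
        match = pattern.match(text)
        if match:
            return intent, match.groupdict()
    return None, {}


if __name__ == "__main__":
    for cmd in ["Deploy build 57 to staging.", "Restart the database server.", "Order pizza"]:
        print(cmd, "->", parse_command(cmd))
```

Returning `(None, {})` for unrecognized input gives the caller an explicit point to ask for clarification instead of guessing. For commands that restart servers or deploy builds, asking is the safer behavior.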

Benefits of LTA Modular Plug-ins

| Feature | Benefit |
|---|---|
| Modularity | Functions can be swapped or updated independently. |
| Language-Aware | Users interact in plain language, reducing training costs. |
| Integrative | Plug-ins directly talk to APIs, CRMs, databases, and cloud services. |
| Automation-Ready | Perfect fit for no-code/low-code environments. |

Key Technologies Enabling LTA

Building effective LTA plug-ins requires contributions from multiple fields:

NLP Engines: OpenAI, Google Dialogflow, Rasa, spaCy

Intent Recognition: Transformer-based models like BERT

Action Executors: APIs, Python scripts, workflow engines

Middleware / Connectors: Node.js, FastAPI, Zapier, cloud automation tools

What’s Next for LTA Plug-ins?

The future of Language to Action is racing forward:

Context-Aware Automation: LLM-powered systems (like GPT) that understand deeper nuance.

Immersive Interfaces: Integration with AR/VR and industrial voice assistants for hands-free contexts.

Democratized Automation: Embedded in no-code platforms so anyone can build workflows with natural language.

Security First: Built-in compliance layers to meet the demands of healthcare, finance, and other regulated industries.

Final Thoughts

LTA modular plug-ins are redefining how businesses and individuals interact with technology, transforming natural language into a powerful command line for the enterprise. By combining scalability, modularity, and NLP-driven intelligence, they pave the way for a future where interacting with complex systems feels as natural as having a conversation.
